Hey there:

Thank you very much for this. I would very much support a Moderation channel here, although for clarity (since this is both a technical and a community/admin forum) Moderation and Moderation-tooling might work better as two separate channels with very different audiences. This report might be relevant to both, but it is the tooling for moderation that strikes me as most urgent to design, specify, and implement together across architectures.

I also feel that you should not have to apologize for the authors' centralization-favoring recommendations; it is on this community to propose alternatives with feature parity and outcome parity if we do not want to be centrally moderated. CSAM in particular tends to provoke governments into hitting software with blunt objects if outcomes dip below the number of “9s” specified in their SLAs with their customers (i.e., their constituents). Implementers and instance operators may have to work together to avoid this ugly fate.

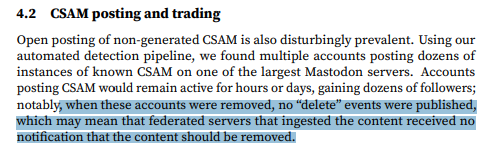

While I’m in an alarmist mood, let me also point out this worrisome detail:

This strikes me as an issue not just for Moderation and/or Moderation tooling but as a core interoperability issue with catastrophic legal implications for instance operators across implementations. That is, if:

A.) Moderators don’t have, or don’t know how to use, interfaces for “nuking” toxic accounts, or if

B.) Nuked accounts aren’t transmitting Delete activities to all followers on other instances (regardless of server software), or if

C.) The servers from which followers subscribed to toxic content aren’t executing those Deletes and Tombstoning those activities and any attachments/content,

then operators of other instances could be exposed to second-hand risk through no wrongdoing of their own, or of the implementers of their server software.
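To make B.) and C.) a bit more concrete, here is a rough Python sketch of both halves, under loudly stated assumptions: activities are plain dicts of ActivityStreams JSON, there is no real HTTP delivery or signature verification, and the store is an in-memory dict. All function names (`build_delete`, `delivery_inboxes`, `handle_delete`) are hypothetical. The ActivityPub spec says a receiving server SHOULD remove its copy of a deleted object and MAY replace it with a `Tombstone`; purging everything a deleted actor authored is a policy choice some servers make, not something the spec mandates.

```python
from datetime import datetime, timezone

AS_CONTEXT = "https://www.w3.org/ns/activitystreams"
ACTOR_TYPES = {"Person", "Service", "Application", "Group", "Organization"}


def build_delete(actor: dict, object_id: str, activity_id: str) -> dict:
    """Sending side (hypothetical helper): a Delete addressed to the actor's followers."""
    return {
        "@context": AS_CONTEXT,
        "id": activity_id,
        "type": "Delete",
        "actor": actor["id"],
        "object": object_id,
        "to": [actor["followers"]],
    }


def delivery_inboxes(followers: list[dict]) -> set[str]:
    """Sending side: one target per follower, collapsed to shared inboxes where offered."""
    inboxes = set()
    for follower in followers:
        shared = follower.get("endpoints", {}).get("sharedInbox")
        inboxes.add(shared or follower["inbox"])
    return inboxes


def _tombstone(obj: dict) -> dict:
    # Tombstone keeps the id and former type but none of the content or attachments.
    return {
        "@context": AS_CONTEXT,
        "id": obj["id"],
        "type": "Tombstone",
        "formerType": obj["type"],
        "deleted": datetime.now(timezone.utc).isoformat(),
    }


def _purge_media(obj: dict, media_cache: set[str]) -> None:
    # Assumption: each attachment is a dict whose "url" is a plain string.
    attachments = obj.get("attachment", [])
    if isinstance(attachments, dict):
        attachments = [attachments]
    for att in attachments:
        url = att.get("url")
        if isinstance(url, str):
            media_cache.discard(url)


def handle_delete(store: dict[str, dict], media_cache: set[str], activity: dict) -> int:
    """Receiving side: tombstone the object and drop its cached media.

    Returns how many objects were tombstoned; 0 means the Delete was ignored.
    """
    obj = activity["object"]
    object_id = obj if isinstance(obj, str) else obj["id"]
    stored = store.get(object_id)
    if stored is None or stored["type"] == "Tombstone":
        return 0
    # Only honour Deletes from the owner (an actor owns itself).
    owner = object_id if stored["type"] in ACTOR_TYPES else stored.get("attributedTo")
    if owner != activity["actor"]:
        return 0
    targets = [stored]
    if stored["type"] in ACTOR_TYPES:
        # Policy choice ("nuking"): also purge everything this actor authored.
        targets += [o for o in store.values()
                    if o.get("attributedTo") == object_id and o["type"] != "Tombstone"]
    for target in targets:
        _purge_media(target, media_cache)
        store[target["id"]] = _tombstone(target)
    return len(targets)


if __name__ == "__main__":
    alice = {"id": "https://a.example/users/alice", "type": "Person",
             "inbox": "https://a.example/users/alice/inbox",
             "followers": "https://a.example/users/alice/followers"}
    note = {"id": "https://a.example/notes/1", "type": "Note",
            "attributedTo": alice["id"],
            "attachment": [{"type": "Image", "url": "https://a.example/media/1.png"}]}
    store = {alice["id"]: alice, note["id"]: note}
    media = {"https://a.example/media/1.png"}
    delete = build_delete(alice, alice["id"], "https://a.example/activities/del-1")
    print(handle_delete(store, media, delete), store[note["id"]]["type"], media)
```

The owner check matters as much as the Delete itself: a server that honours any Delete it receives would let a third party wipe someone else's content, which trades one moderation failure for another.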