Esteemed Fediverse,

How could we represent time-based content, e.g. as in video subtitles?

How could we bridge the efforts of WebVTT and ActivityPub?

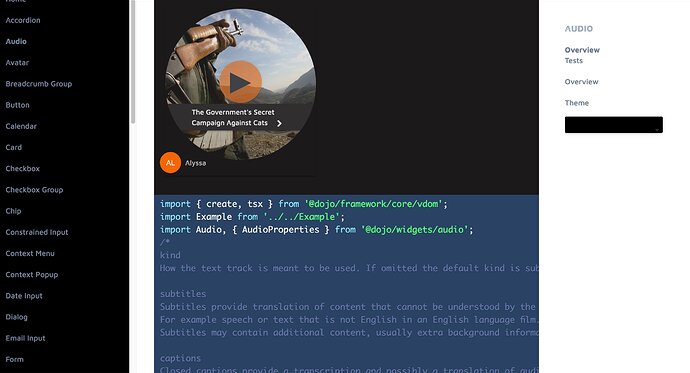

redaktor is now porting some of the “Audiovisual” ActivityPub widgets over from the private repo to http://github.com/redaktor/widgets-preview/.

The general reactive architecture is:

Pretty much every ActivityPub type gets its own widget/web component, with visual representations for rows/columns (e.g. cards) and for full pages.

All of this comes with no-JS fallbacks and progressive enhancement, in a nice, themed and modular environment [a huge thank you to Ant, Dylan and all dojo.io contributors, you rock! While evil people use Facebook’s “React” (/wink)].

Everything conforms to the specifications:

• ActivityPub

• Activity Vocabulary

Place and Time are already at the core of redaktor (maps, events, calendars etc.).

And the types Audio and Video do add “Time”.

The magic now is that we can add more meaning and further content while, e.g., the Audio is playing.

redaktor also supports multiple people creating one piece of content (which, btw, is a MUST in ActivityPub, but anyway, I’ll stop preaching …):

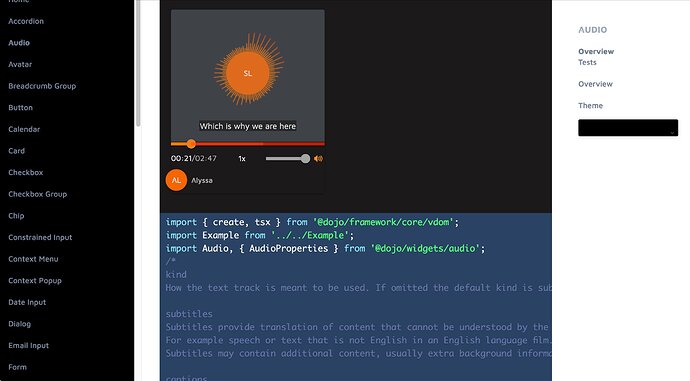

It can also visualise the current speaker in, e.g., a podcast.

It needs nothing but an ActivityPub object, but it also supports .vtt or .srt files with “Captions”, “Subtitles”, “Chapters” and “Metadata” (btw, did I mention that redaktor/framework now has full support for Dublin Core, IPTC, XMP and ID3 tags, yay?)

However:

We can cite any ActivityPub content from a given startTime, either until its endTime or for its duration.

(As you can see, redaktor players have a “speed” control, but we can adjust the times accordingly …)
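A minimal sketch of the citation window, assuming ISO 8601 datetimes and only a simple subset of xsd:duration (days, hours, minutes, seconds). The helper names are hypothetical, and when both endTime and duration are present this sketch simply prefers endTime. It also assumes cue times stay in media time (as HTML media `currentTime` does), so the player speed only changes how long a cue is visible on the wall clock.

```python
import re
from datetime import datetime, timedelta

# Simplified xsd:duration: days, hours, minutes, seconds only (no years/months).
_DURATION = re.compile(
    r"^P(?:(?P<days>\d+)D)?"
    r"(?:T(?:(?P<hours>\d+)H)?(?:(?P<minutes>\d+)M)?(?:(?P<seconds>\d+(?:\.\d+)?)S)?)?$"
)


def parse_duration(value: str) -> timedelta:
    """Parse a simple duration such as 'PT1M30S' into a timedelta."""
    match = _DURATION.match(value)
    if not match or value == "P" or value.endswith("T"):
        raise ValueError(f"unsupported duration: {value!r}")
    parts = {name: float(num) for name, num in match.groupdict().items() if num}
    return timedelta(**parts)


def citation_window(obj: dict) -> tuple[datetime, datetime]:
    """Return (start, end): endTime if present, otherwise startTime + duration."""
    start = datetime.fromisoformat(obj["startTime"])
    if "endTime" in obj:
        return start, datetime.fromisoformat(obj["endTime"])
    return start, start + parse_duration(obj["duration"])


def wall_clock_seconds(media_start: float, media_end: float, speed: float) -> float:
    """Real seconds a cue spanning [media_start, media_end] (media time) stays visible."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return (media_end - media_start) / speed
```

For example, a 30 second cue played at speed 1.5 is on screen for 20 real seconds, while its media times stay unchanged.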

Anyway:

What should be the preferred way to represent time-based content in ActivityPub?

- The native way would probably be (???):

To support time-based content, the type SHOULD be Event, Audio or Video.

This ActivityPub Object MUST have a valid startTime.

This ActivityPub Object MUST have either a valid endTime or duration or both.
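The three rules above could be checked roughly like this. It is a sketch only: it assumes the JSON is already parsed into a dict, validates startTime and endTime with Python's `datetime.fromisoformat`, and merely checks that duration looks like an xsd:duration (starts with "P") instead of fully parsing it. `check_time_based` is a hypothetical name.

```python
from datetime import datetime

TIME_BASED_TYPES = {"Event", "Audio", "Video"}


def _is_datetime(value) -> bool:
    """True if value parses as ISO 8601 (Python < 3.11 rejects a trailing 'Z')."""
    try:
        datetime.fromisoformat(value)
        return True
    except (TypeError, ValueError):
        return False


def check_time_based(obj: dict) -> list:
    """Collect rule violations: SHOULD -> 'warning', MUST -> 'error'."""
    problems = []
    types = obj.get("type", [])
    if isinstance(types, str):
        types = [types]
    if not TIME_BASED_TYPES.intersection(types):
        problems.append("warning: type SHOULD be Event, Audio or Video")
    if not _is_datetime(obj.get("startTime")):
        problems.append("error: MUST have a valid startTime")
    has_end = _is_datetime(obj.get("endTime"))
    # Shallow check only: every xsd:duration starts with 'P'.
    duration = obj.get("duration")
    has_duration = isinstance(duration, str) and duration.startswith("P")
    if not (has_end or has_duration):
        problems.append("error: MUST have a valid endTime or duration (or both)")
    return problems
```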

Time-based content applies to the properties name, content, summary, icon, image, instrument and location (including natural language equivalents like nameMap etc.) and to attachment, so that other ActivityPub Activities, Links or Objects can be included via attachment …

name, content and summary (incl. langString) would behave like a caption track does in WebVTT / HTML5.

icon and image would add emphasis (e.g. icon = the circle in Mastodon’s player, image = a slideshow).

The other properties could, e.g., be shown below the player controls to add more magic …
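The three roles above can be read as a routing table for a player widget. This is a hypothetical sketch: the slot names `caption`, `emphasis` and `below_controls` are made up for illustration, and natural language maps (nameMap etc.) are routed together with their base property.

```python
# Hypothetical display slots for a player widget; names are illustrative only.
CAPTION_PROPS = {"name", "content", "summary"}
EMPHASIS_PROPS = {"icon", "image"}
BELOW_CONTROLS_PROPS = {"instrument", "location", "attachment"}


def route_properties(item: dict) -> dict:
    """Sort an item's time-based properties into caption / emphasis / below-controls slots."""
    slots = {"caption": {}, "emphasis": {}, "below_controls": {}}
    for key, value in item.items():
        # nameMap, contentMap, summaryMap are routed like their base property
        base = key[:-3] if key.endswith("Map") else key
        if base in CAPTION_PROPS:
            slots["caption"][key] = value
        elif base in EMPHASIS_PROPS:
            slots["emphasis"][key] = value
        elif base in BELOW_CONTROLS_PROPS:
            slots["below_controls"][key] = value
        # anything else (id, type, startTime, ...) is not displayed
    return slots
```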

Time-based content applies if

- one or more of the above properties have a valid `startTime`, a valid `endTime`, or both, and
- these times fall within the main object’s `startTime` to `endTime` range.

A missing endTime would imply “show until the end”, while a missing startTime would imply “show from the beginning until endTime” …
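Put together, the applicability rules and the defaults for missing times could look like the following sketch. It assumes time-based items live in attachment, all times are ISO 8601 datetimes with offsets, and the main object's endTime is already known (derive it from duration first if needed). `active_items` is a hypothetical name.

```python
from datetime import datetime


def _dt(value):
    """Parse an ISO 8601 datetime, or return None when the property is missing."""
    return datetime.fromisoformat(value) if value else None


def active_items(main: dict, now: datetime) -> list:
    """Attachment items that apply at `now` under the proposed rules."""
    main_start = _dt(main["startTime"])
    main_end = _dt(main["endTime"])  # assumption: already resolved from duration if needed
    active = []
    for item in main.get("attachment", []):
        start, end = _dt(item.get("startTime")), _dt(item.get("endTime"))
        if start is None and end is None:
            continue  # neither time given: not time-based content
        start = start or main_start  # missing startTime: from the beginning
        end = end or main_end  # missing endTime: until the end
        if start < main_start or end > main_end:
            continue  # outside the main object's startTime..endTime
        if start <= now < end:
            active.append(item)
    return active
```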

Pro: It’s all ActivityPub.

Con: It does not support an Actor [e.g. the current speaker in a podcast], OR:

Should we send the Actor as an attachment?
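If the Actor did travel in attachment, picking the current speaker is the same window test as for any other item. A sketch under that assumption: the actor types are the five from the Activity Vocabulary, missing times default to the main object's times, and `current_speakers` is a hypothetical helper.

```python
from datetime import datetime

ACTOR_TYPES = {"Application", "Group", "Organization", "Person", "Service"}


def current_speakers(main: dict, now: datetime) -> list:
    """Names of Actor attachments whose startTime..endTime window contains `now`."""
    speakers = []
    for item in main.get("attachment", []):
        types = item.get("type", [])
        types = [types] if isinstance(types, str) else types
        if not ACTOR_TYPES.intersection(types):
            continue  # not an Actor, e.g. a Note with show notes
        start = datetime.fromisoformat(item.get("startTime", main["startTime"]))
        end = datetime.fromisoformat(item.get("endTime", main["endTime"]))
        if start <= now < end:
            speakers.append(item.get("name", item.get("id", "?")))
    return speakers
```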

General questions:

How can an object explicitly signal that it should be used this way?

- The `type` of the main object can be multiple, e.g. `["Event", "Service", "https://redaktor.me/AP/timeBased"]`

or

- something like `as:timeBased`
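Both options are easy for a consumer to detect. In this sketch, the type URI is the one from the proposal above, while the boolean `timeBased` property is a hypothetical stand-in for `as:timeBased`; it is not part of the Activity Vocabulary and would need an @context entry.

```python
TIME_BASED_TYPE_URI = "https://redaktor.me/AP/timeBased"


def is_explicitly_time_based(obj: dict) -> bool:
    """True if the object opts in via its type array or a (hypothetical) timeBased flag."""
    types = obj.get("type", [])
    if isinstance(types, str):
        types = [types]
    # Option 1: an extra entry in the multiple-value type
    if TIME_BASED_TYPE_URI in types:
        return True
    # Option 2: hypothetical boolean property, compacted as "timeBased"
    return obj.get("timeBased") is True
```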

Regarding multiple languages, we can build menus the same way as for WebVTT subtitles, captions etc.

For the HTML track element, assume as:href = src, as:hreflang = srclang and as:name = label.

What is missing is the kind (meaning) of the track, i.e. subtitles, captions, descriptions, chapters or metadata, and this is very important; see MDN.
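The mapping above, plus the missing kind, as a small sketch. Because ActivityPub has no property for the track kind, it is passed in separately here; the allowed values are the five kind keywords of the HTML track element. `link_to_track_attrs` is a hypothetical helper.

```python
WEBVTT_KINDS = {"subtitles", "captions", "descriptions", "chapters", "metadata"}


def link_to_track_attrs(link: dict, kind: str) -> dict:
    """Map an ActivityStreams Link onto HTML track attributes."""
    if kind not in WEBVTT_KINDS:
        raise ValueError(f"unknown track kind: {kind!r}")
    attrs = {"src": link["href"], "kind": kind}  # as:href -> src
    if "hreflang" in link:
        attrs["srclang"] = link["hreflang"]  # as:hreflang -> srclang
    if "name" in link:
        attrs["label"] = link["name"]  # as:name -> label
    return attrs
```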

In the specification, startTime etc. are defined rather vaguely.

Time-based content can have two meanings:

• real time (the main object’s time is the actual time of the content, e.g. a plenary session, an event livestream or similar, and all other times refer to it)

• virtual time (the real time does not matter; it is rather a generic timeline set up to carry additional content, e.g. for podcasts or music)
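Both meanings can share the same arithmetic: an item's position in the media is its startTime minus the main object's startTime. In real time the base is the actual start of the session; for virtual time this sketch assumes an arbitrary fixed base (1970-01-01T00:00:00Z here), which is an assumption and not something the specification defines.

```python
from datetime import datetime, timedelta

# Assumption for virtual time: a fixed, arbitrary base; nothing in the spec defines it.
VIRTUAL_BASE = "1970-01-01T00:00:00+00:00"


def to_media_offset(main_start: str, item_start: str) -> float:
    """Seconds from the main object's startTime to an item's startTime."""
    delta = datetime.fromisoformat(item_start) - datetime.fromisoformat(main_start)
    return delta.total_seconds()


def from_media_offset(main_start: str, seconds: float) -> str:
    """The startTime to publish for an item `seconds` into the media."""
    return (datetime.fromisoformat(main_start) + timedelta(seconds=seconds)).isoformat()
```

For a plenary session starting at 09:00, an item at 09:15:30 sits 930 seconds into the recording; for a podcast on the virtual timeline, `from_media_offset(VIRTUAL_BASE, 65)` gives `1970-01-01T00:01:05+00:00`.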

VS

WebVTT approach

We can have .vtt or .srt files as attachments.

Then use kind=“metadata” with the ActivityPub JSON as the cue payload below the timings.
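A sketch of writing such a file, assuming each cue is given as (start seconds, end seconds, ActivityPub object). Compact single-line JSON keeps blank lines out of the payload, and since WebVTT forbids "-->" inside a cue payload, the sketch escapes ">" there as `\u003e`, which JSON parsers read back unchanged. The function names are hypothetical.

```python
import json


def vtt_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (hh:mm:ss.ttt)."""
    millis = round(seconds * 1000)
    hours, rest = divmod(millis, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def to_webvtt_metadata(cues: list) -> str:
    """Build a WebVTT file from (start, end, activitypub_object) tuples, one JSON line per cue."""
    lines = ["WEBVTT", ""]
    for start, end, obj in cues:
        # json.dumps escapes newlines, so each payload is a single line.
        payload = json.dumps(obj, ensure_ascii=False, separators=(",", ":"))
        # '-->' is not allowed in a cue payload; escaping '>' keeps the JSON equivalent.
        payload = payload.replace("-->", "--\\u003e")
        lines += [f"{vtt_timestamp(start)} --> {vtt_timestamp(end)}", payload, ""]
    return "\n".join(lines)
```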

The only difference would be that here the times are the top-level identifiers, followed by the JSON (id), while in ActivityPub the times are members of an Object (id) …
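Going the other way makes that difference concrete: each cue's timing is moved into the JSON object it carries, as startTime and endTime relative to a base datetime (e.g. the main object's startTime). This is a simplified parser sketch that only handles cue timing lines followed by a JSON payload, not the full WebVTT parsing algorithm; `vtt_metadata_to_attachments` is a hypothetical name.

```python
import json
import re
from datetime import datetime, timedelta

_TS = r"(?:(\d+):)?(\d{2}):(\d{2})\.(\d{3})"  # hours are optional in WebVTT
_TIMING = re.compile(rf"^{_TS}\s+-->\s+{_TS}")


def _to_seconds(h, m, s, ms) -> float:
    return int(h or 0) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000


def vtt_metadata_to_attachments(vtt: str, base: datetime) -> list:
    """Turn 'times -> JSON' cues into ActivityPub objects that carry their own times."""
    items = []
    for block in vtt.replace("\r\n", "\n").split("\n\n"):
        lines = block.strip("\n").split("\n")
        for i, line in enumerate(lines):
            match = _TIMING.match(line)
            if not match:
                continue  # header, cue identifier or NOTE line
            groups = match.groups()
            obj = json.loads("\n".join(lines[i + 1:]))
            obj["startTime"] = (base + timedelta(seconds=_to_seconds(*groups[:4]))).isoformat()
            obj["endTime"] = (base + timedelta(seconds=_to_seconds(*groups[4:]))).isoformat()
            items.append(obj)
            break
    return items
```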