Interestingly, alongside this discussion and in quick succession, we have already found five protocols that aim to be lightweight versions of Linked Data:

- An Introduction to The Nostr Protocol posted by @melvincarvalho

- The Block Protocol posted by me

- Atomic Data: Easy way to create, share and model linked data posted by @SelectSweet

- Meld (m-ld): Live information sharing maintained by @gsvarovsky

- RDF-DEV Community Group working on EasierRDF and RDF-star open standards posted by me

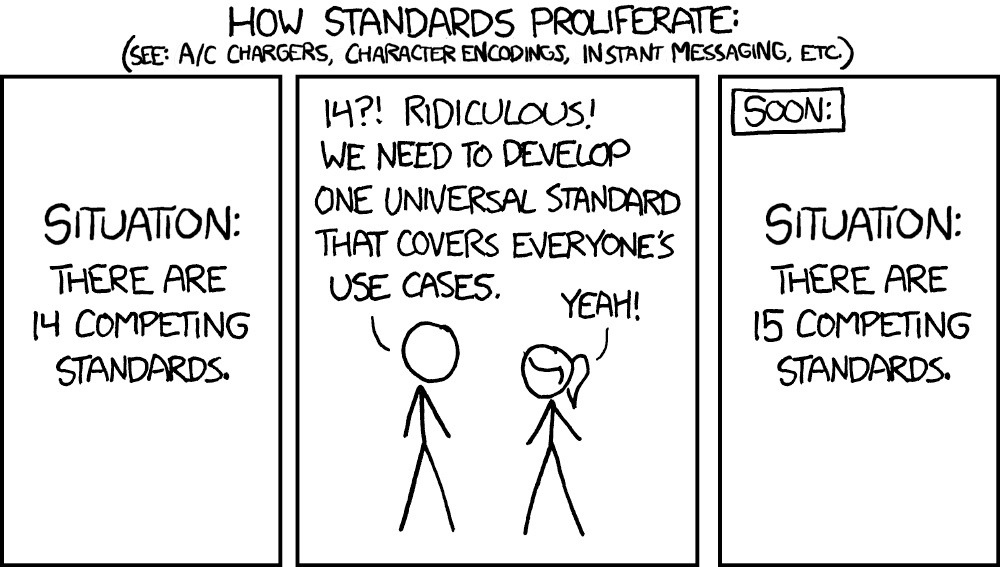

They all look like great initiatives to me, with a focus on practical application and on reducing linked data complexity. Some of their parts are complementary, while others overlap or take different approaches to the same problem. Each relates to the underlying (W3C) linked data standards in its own way. Which brings me to the most-quoted XKCD cartoon, "Standards":

But maybe it doesn't apply at all: if each of these protocols complies sufficiently with linked data standards, they simply give developers more choice in how they design their apps, without pulling them into competing ecosystems.

At the least, I think each of these projects would be best served by keeping a close eye on that compliance and retaining a good level of interoperability.

I should probably start collecting these protocol projects in the delightful project; any PRs or issues are most welcome:

Update: interesting follow-ups are being posted on the Solid companion thread:

- Generate UI components from RDF: jeff-zucker/solid-ui-components on GitHub (generates high-level HTML widgets from RDF data)

- TerminusDB's TerminusX: a cloud service for building linked data apps (a proprietary add-on to the open-source database, but conceptually interesting)

- LinkML: a general-purpose modeling language for linked data