I want to copy part of a discussion I had on Matrix, so as to ‘archive’ it. I guess my overall point is this:

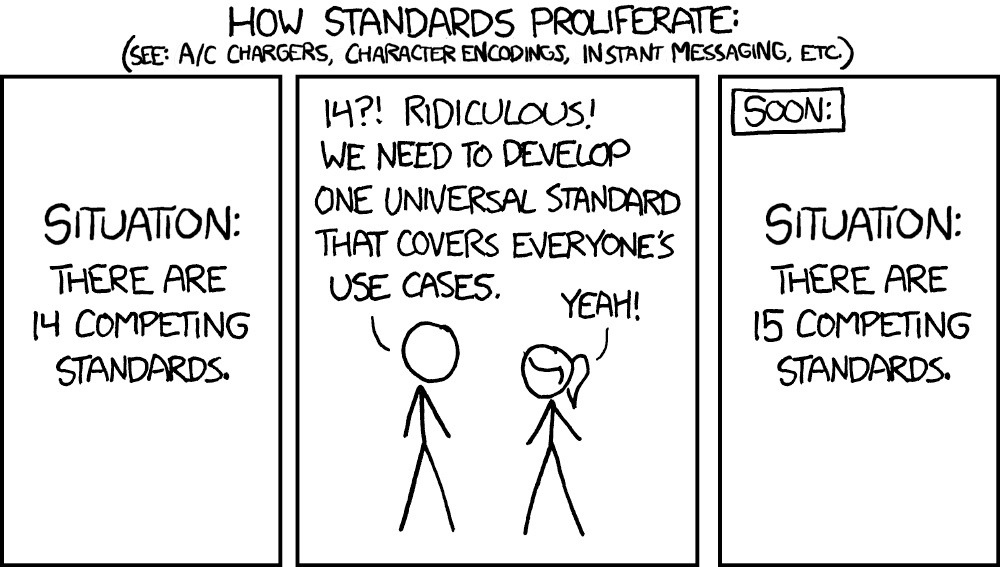

Without well-defined processes, and the community organization and tools that facilitate the social interaction needed to keep them going, an ecosystem will only evolve through Ad-hoc Interoperability and will suffer its long-term downsides: stalling or exponential complexity.

openEngiadina Matrix chat

In the openEngiadina Matrix room I asked the following question:

There is, I think, an interesting discussion on the Solid forum, ‘Is RDF “hard”?’. It boils down to this: no matter how you turn it, interoperability is really hard, especially ‘semantic interoperability’ (expressing universal semantic meaning). For the Solid community I recommend highlighting more of the Process side of software development and its Social aspects, besides the deep technical focus.

In this regard I am curious to hear how openEngiadina envisions the semantic network to be built over time when it is in production. I guess the focus on local knowledge already solves a lot of the problem, and with UI widgets tailored to RDF constructs you can make it easier to crowdsource content aggregation. But still there’s a lot of different knowledge to be modeled and potentially reused across platforms.

To which @pukkamustard gave this answer:

I completely agree. Interoperability is hard, especially semantics.

Agreeing on semantics is the same as agreeing on a certain world view and classification of concepts. It is an intrinsically social endeavor that requires understanding how people think and conceptualize things. Then you need to find a basis that everybody agrees on and that is simple enough to be formalized. It’s not easy. In fact, I think trying to find a universal semantics that works for everybody is futile. Luckily, we don’t need universal semantics.

In my opinion, the most important thing in RDF is the open-world assumption - the fact that you never have complete knowledge of anything. If you hold a piece of RDF data and think that it represents a box, you cannot assume that other people also think that the piece of data represents a box - to them it might represent a musical instrument. This might be because other people have different data on the thing or because they have a different semantic understanding of the thing.

Even if two parties have two completely different understandings of something, the thing might still be described with properties that both parties understand. For example, the thing might be annotated with a geographic position using some semantics (vocabulary) that both parties understand (e.g. W3C Semantic Web Interest Group: Basic Geo (WGS84 lat/long) Vocabulary). So even if two parties cannot agree on whether the thing is a box or a musical instrument, they might still be able to agree that the thing is located at a certain place.

A contrived example, but I hope this shows that RDF does not require us to agree on a universal semantics. If we share partial semantics we can already go very far. Luckily there is already an established and rich collection of specialized semantics/vocabularies that we can re-use (e.g. DC Terms, Geo, ActivityStreams, The Music Ontology). These can be used to make the common basis of understanding larger.
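The box-versus-instrument example can be sketched in a few lines of Python. This is only an illustration, not anything openEngiadina actually runs: triples are plain tuples rather than objects from an RDF library, the `example.org` identifiers and the coordinates are invented, and only the `rdf:type` and Basic Geo property IRIs are real.

```python
# Triples are plain (subject, predicate, object) tuples; no RDF library is used.
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
GEO_LAT = "http://www.w3.org/2003/01/geo/wgs84_pos#lat"
GEO_LONG = "http://www.w3.org/2003/01/geo/wgs84_pos#long"

# Hypothetical identifiers and coordinates, invented for this illustration.
THING = "http://example.org/thing/1"
BOX = "http://example.org/vocab-a#Box"
INSTRUMENT = "http://example.org/vocab-b#MusicalInstrument"

# Party A thinks the thing is a box.
party_a = {
    (THING, RDF_TYPE, BOX),
    (THING, GEO_LAT, "46.80"),
    (THING, GEO_LONG, "10.30"),
}

# Party B thinks the same thing is a musical instrument.
party_b = {
    (THING, RDF_TYPE, INSTRUMENT),
    (THING, GEO_LAT, "46.80"),
    (THING, GEO_LONG, "10.30"),
}


def types_of(graph, subject):
    """Return every type asserted for `subject` in `graph`."""
    return {o for s, p, o in graph if s == subject and p == RDF_TYPE}


# Statements both parties hold: the position, but no type.
common = party_a & party_b

# Merging loses nothing: the thing simply ends up with both types.
merged = party_a | party_b

print(sorted(common))
print(sorted(types_of(merged, THING)))
```

The two parties never settle on a type, yet the intersection of their graphs still carries the location, which is the partial shared semantics described above.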

In terms of openEngiadina and local knowledge: Local Knowledge is not only the representation of physical things that are bound to some locality. It is also a conceptualization of things - a semantic - that is shared in a locality. The way I envision things to grow: Start with your own small local semantics and grow by finding larger common semantics.

And my response:

Thank you for this elaboration, pukkamustard.

Some time ago I came upon some articles by Kevin Feeney of TerminusDB.com. In one article he advocates having (or at least starting with) closed-world interpretations of semantic content:

However, if I have a RDF graph of my own and I want to control and reason about its contents and structure in isolation from whatever else is out there, this is a decidedly closed world problem

And in the next part he elaborates on some big issues with Linked Data:

the big problem is that the well-known ontologies and vocabularies such as foaf and dublin-core that have been reused, cannot really be used as libraries in such a manner. They lack precise and correct definitions and they are full of errors and mutual inconsistencies [1] and they themselves use terms from other ontologies — creating huge and unwieldy dependency trees.

Just now I am discussing this with @melvincarvalho. I feel that when we develop apps we naturally go from closed world vocabularies, and that the incentives to keep these interoperable enough with other apps out there should be taken care of by good Processes and Social interaction.

Not taking these into account will mean we either drift towards - what I call - Ad-hoc Interoperability (what the fedi has: learn from code and create your own flavour of interop from that), or strive for Universal Interoperability and all the complexity that comes with that.

@pukkamustard’s reply:

However, if I have a RDF graph of my own and I want to control and reason about its contents and structure in isolation from whatever else is out there, this is a decidedly closed world problem

I agree with the premise. I don’t agree that means we need to throw out the open-world assumption. I think a solution is that we need to be able to decide finely what data is used for reasoning and what not.
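One possible reading of "deciding finely what data is used for reasoning" is sketched below. It assumes data is grouped into named graphs and that a closed-world check only ever looks at the graphs the caller explicitly selects; the graph names, the `ex:` identifiers and the helper functions are all hypothetical, and nothing here reflects an actual openEngiadina design.

```python
# Named graphs: graph name -> set of (subject, predicate, object) triples.
# All graph names and identifiers are hypothetical.
NAME = "ex:name"

dataset = {
    "local": {
        ("ex:alice", NAME, "Alice"),
        ("ex:bob", "ex:knows", "ex:alice"),
    },
    "remote": {
        ("ex:bob", NAME, "Bob"),
    },
}


def select(dataset, graph_names):
    """Union of the triples in the graphs chosen for reasoning."""
    triples = set()
    for graph_name in graph_names:
        triples |= dataset[graph_name]
    return triples


def has_name_closed(triples, subject):
    """Closed world: a name not found in the selected data counts as False."""
    return any(s == subject and p == NAME for s, p, _ in triples)


def has_name_open(triples, subject):
    """Open world: a name not found is merely unknown, signalled by None."""
    return True if has_name_closed(triples, subject) else None


local_only = select(dataset, ["local"])
everything = select(dataset, ["local", "remote"])

print(has_name_closed(local_only, "ex:bob"))
print(has_name_open(local_only, "ex:bob"))
print(has_name_closed(everything, "ex:bob"))
```

The closed-world answer is only as strong as the selection behind it: the same question gets a different answer once the remote graph is admitted, while the open-world variant never claims more than "unknown" about missing data.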

I feel that when we develop apps we naturally go from closed world vocabularies

I agree and really think this is what we need to change. We should start thinking open-world by default.

@how’s follow-up to that:

I guess there’s a question of perspective: on the one hand, the ‘closed world’ perspective makes sense when you’re manipulating your own data. But when others manipulate your data, when you describe your vocabulary, and when you conceive data usage, you should always leave space for other things to happen, i.e., consider the open world assumption.

And finally my response:

I once saw a toot by @dansup saying something like (paraphrasing): “Oh, it is so nice to be working on new federated features that do not yet exist, as I can just ‘invent’ what I need on-the-fly”. A completely understandable notion: focus on your own app’s needs, without having to draft a spec or negotiate with others. There are no others yet. Most fedi devs are open to making changes later if that increases interop opportunities, unless they have become too deeply invested in a certain way of doing things and are unwilling to change. If they are a dominant project in their domain, then that will become an issue.

I agree and really think this is what we need to change. We should start thinking open-world by default.

Yes, I think so too. But the reality is that this takes significant extra effort, and initially this rests with the dev who first needs a new AP extension but is mostly interested in delivering a good MVP app at that time. The potential win-win of going the extra mile lies somewhere in the future, when others want to interoperate.

We should streamline the process of ‘offering an extension’ to others as much as possible, lowering the barrier, and include the steps by which the extension can be iterated on and matured for more open-world application.

Right now, practice shows there is too little incentive to engage in such a process, and ad-hoc interoperability is the only way forward.